I think I've lost count of the number of times I've told people I'm just waiting for the day that I can download my mind into a giant robot & run around wrecking stuff up (or more lately, that we could upload ourselves into a world where we can all be giant robots, or anything else for that matter), but this has always been a "one day" dream; something I might live to see, with luck. Now, however, we may find ourselves on the brink of seeing a whole new form of intelligent life emerge under our noses (and above our heads), combining many aspects of these ideas within a handful of years. The thing is, are we ready for it yet?

|

| Even with a direct brain hookup, it's still Pong for one. - Image: Danshope.com / Wired.com |

|

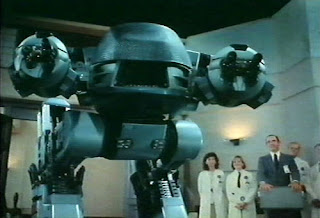

| This is what the boardroom at Dalek HQ looks like. - Images: New York Times |

The catch in this experiment, however, is that in order to gain the needed precision, volunteers had to be strapped into an MRI machine for the duration, so that the activity within their brains could be monitored in real time and in detail. With its noise, close confinement & expense, such a system would seem to hold attraction or use only for day-players and tourists. Even with a computer body somewhere else to call your own, the sense of confinement would soon send people racing back to their real bodies and the real world.

But that's only if they have a real world to return to.

Around the world, millions of people are paralysed, permanently bedridden or even "locked in", with little or no possible communication with the outside world; they have no "real world" to speak of. Many face the stark reality of living simply as the alternative to being dead, and some would choose the latter, since at times the former differs from it only in brain state and pulse rate. In recent years technology has offered the "locked in" a chance to communicate with the outside world through sensors that read brain activity or tiny physical movements and allow a computer program to generate text or speech. Indeed, such a system has been on public display for many years through the "voice" of Stephen Hawking (who has, incidentally, repeatedly declined to upgrade his voice to a more realistic tone, having come to accept his distinctive tinny vocalisations as a part of who he is).

What if "surrogate" technologies such as the experiment mentioned above could offer the "locked in" a third choice, beyond death or mere survival? What if they could get outside, get a job and rejoin the wider world... as a robot?

Of course, at first the idea seems absurd. The person in question wouldn't be a robot any more than a person playing a computer game or driving a remote-controlled toy "is" their character or vehicle; the thing is, by many definitions they're not currently living the life of a person either. For a healthy person, the idea of spending whole days, let alone every waking hour, lying on a slab hooked up to machines is something most would not want to consider. But for those already so confined, this might represent a previously impossible escape into a wider world.

For the last few decades (arguably as far back as WW2), research teams have been working on remote-controlled robots (generally known as Waldo units) to undertake specialised jobs that are too dangerous, or even physically impossible, for a human body to perform. These range from firefighting, medical applications, logistics, search & rescue, working in radioactive environments and mapping, to exploration and even warfare. To date these have always been operated by guys with joysticks (or occasionally a neural hook-up): "day players", who log off and go home at the end of their shift. So what of the potential for "residential" robot jockeys?

Here are people, trapped in their own minds at present, who are often desperate to interact with the world but have little hope of ever doing so. Given access to a remote body, and having no usable body of their own as far as physical action is concerned, they could take up permanent residence. All at once they would be not so much locked into their body as voluntarily locked out of it. An instant Waldo generation.

With little technological progress from where we are today, we could find ourselves sharing our streets with human minds in robot bodies long before the innovations or surgical techniques required to put them there physically are even on the horizon. In 1987, RoboCop (and later its dubious sequels) explored the psychological and societal effects of trying to make a man into a robot. Now I'm left with an interesting thought: what if ED-209 were a person? What if the giant (or small) robots roaming our streets were the eyes, ears, hands and feet (and antennae) of real people, far away? What if ED-209 were just Ed, with a wife, two kids and a mortgage, and your Roomba used to play jazz guitar?

Legally, the way is already clear, even if it might require a little polishing. Crimes committed by a surrogate (under direct control, of course) would be attributed to the "pilot", possibly under the laws governing vehicles, objects or weapons. Crimes committed against surrogates would currently constitute only property damage since, psychological trauma aside, the "pilot" would not be physically hurt (developments in haptic technology notwithstanding). But how would the world react to the first crimes committed by embodied surrogates? When the first killing spree is carried out not by a madman with a gun but by the mind within a warplane or a tank?

The main issues I can foresee are societal. How quickly would (or could) we adjust to the idea that the robots around us aren't just smart but are actually people: ghosts in a machine? Could we get used to people changing bodies the way we currently change jobs? Would this benefit the development and adoption of artificial intelligence, or push it back into a small niche of applications where full autonomy is required or wireless links are impractical?

Far from being a nebulous "what-if" for sci-fi authors to speculate on, our robotic future may be waiting patiently in the (hospital) wings. You may not be able to download your mind into a giant robot just yet...

But you'll be able to stream it.